AI-Powered Cyber Attacks Are Redefining Financial Compliance—Are You Ready?

For years, financial institutions have been strengthening their cybersecurity defenses, layering firewalls, deploying fraud detection algorithms, and tightening access controls. But 2025 marks a turning point—one where these traditional security measures are proving inadequate.

The Menlo Security 2025 Report on Browser Security reveals an unsettling trend: AI is no longer just a tool for cyber defense—it has become a weapon for attackers. Cybercriminals are automating their attacks, using AI to bypass security filters, manipulate trust-based systems, and exploit browser vulnerabilities faster than institutions can patch them. The result? A growing wave of cyber threats that financial compliance teams are struggling to contain.

The financial industry has always been a prime target for cybercrime, but the nature of attacks is changing. AI is transforming cyber threats from static, predictable incidents into adaptive, real-time security challenges. Phishing sites now evolve dynamically, deepfake scams are becoming indistinguishable from real interactions, and compliance systems themselves are being manipulated by AI-driven attacks.

The question is no longer whether AI-driven cyber threats will impact financial institutions—it’s whether firms are prepared to detect and counter them before they become regulatory liabilities.

AI is Exploiting the Financial Industry’s Greatest Strength—Trust

Trust is the foundation of financial services. Customers rely on institutions to protect their assets, employees trust internal security systems, and regulators trust firms to maintain compliance. But AI-powered cyber threats are now weaponizing that trust against financial institutions.

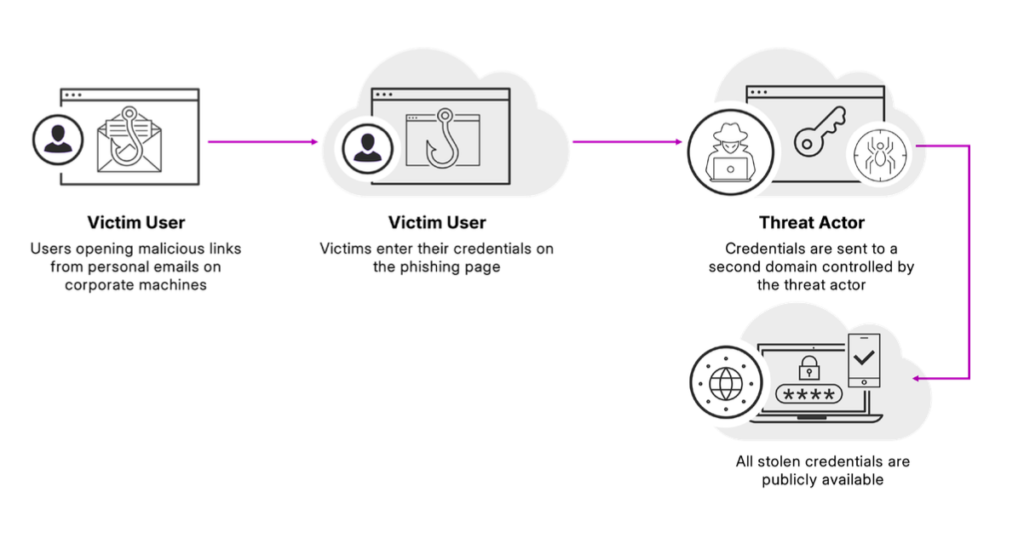

One of the most alarming revelations from the Menlo report is the 140% increase in browser-based phishing attacks in 2024, many of which utilized AI-generated deception techniques. Unlike traditional phishing, which relied on static fake websites, these new attacks:

- Generate personalized phishing pages in real time, adapting their appearance based on the victim’s browsing history and location.

- Use AI to create deepfake voices and videos, impersonating executives to authorize fraudulent transactions.

- Exploit brand impersonation at scale, making it nearly impossible for employees and customers to distinguish real communications from fake ones.

Multi-factor authentication (MFA) and email security filters—once considered strong defenses—are proving increasingly ineffective against these AI-driven threats. The financial sector is built on trust-based security models, but AI has completely redefined the rules of deception.

The “0.0.0.0 Day” Crisis—A Warning Sign for Financial Institutions

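Among the most critical findings highlighted in the Menlo report is the “0.0.0.0 Day” vulnerability, a weakness in how browsers on macOS and Linux handle requests addressed to 0.0.0.0. A public web page can use that address to reach services running on the user’s own machine, which are normally assumed to be shielded from the internet.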
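To make the risk concrete, here is a minimal Python sketch of the kind of check a defensive proxy or browser policy might apply: it flags URLs whose host is 0.0.0.0, a loopback address, or a private address, so that requests from public pages to those targets can be refused. The function name `targets_local_service` is hypothetical, and this is an illustration under those assumptions, not a description of how any browser or vendor product implements the control.

```python
import ipaddress
from urllib.parse import urlsplit


def targets_local_service(url: str) -> bool:
    """Return True if the URL's host points at the local machine or an
    internal network (hypothetical helper, literal IPs and 'localhost' only)."""
    host = urlsplit(url).hostname
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        # A DNS name rather than a literal IP; resolving it is out of scope.
        return False
    # 0.0.0.0 is the "unspecified" address at the heart of this issue.
    return addr.is_unspecified or addr.is_loopback or addr.is_private
```

A real control would also need to handle DNS names that resolve to local addresses, which this sketch deliberately ignores.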

This is particularly concerning for financial institutions because browsers are now the primary interface for essential financial operations. Employees use them to access trading platforms, risk assessment tools, compliance dashboards, and cloud-based financial applications.

Yet, browsers have quietly become one of the most vulnerable entry points for AI-driven cyberattacks. The report highlights that:

- 80% of cyberattacks now originate from browser-based vulnerabilities, rather than traditional network breaches.

- AI is accelerating the discovery of zero-day exploits, allowing attackers to automate vulnerability detection before security teams can respond.

- Cloud-hosted phishing attacks have surged, with platforms like Cloudflare being misused to host malicious financial portals that appear entirely legitimate.

Financial firms that continue to focus exclusively on endpoint security while neglecting browser-based risks are leaving themselves exposed to a new era of AI-powered cyber threats.

AI is Turning Compliance Tools Into Attack Vectors

Financial institutions have invested heavily in AI-driven fraud detection and compliance automation, believing these tools would strengthen security. But the Menlo report warns that attackers are now using AI to manipulate the very systems designed to protect financial institutions.

One of the most troubling developments is AI poisoning, often called data poisoning, where cybercriminals inject manipulated records into the data that machine learning models learn from. This allows them to:

- Trick fraud detection systems into approving fraudulent transactions while blocking legitimate ones.

- Bypass KYC (Know Your Customer) verification by generating AI-powered synthetic identities that resemble real users.

- Automate security evasion techniques, continuously refining their attacks based on how AI-driven compliance systems respond.
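One simple way to picture a defense against poisoned training data is a sanity check on each new batch before it reaches the model. The Python sketch below compares the share of fraud labels in a batch against a historical baseline and flags large deviations using a z-score on the proportion. The function name `label_rate_shifted`, the 0/1 label encoding, and the threshold of 3.0 are all assumptions made for illustration; a check like this would only catch crude label manipulation, not subtle poisoning.

```python
import math
from typing import Sequence


def label_rate_shifted(baseline_rate: float,
                       batch_labels: Sequence[int],
                       z_threshold: float = 3.0) -> bool:
    """Flag a training batch whose share of fraud labels (1 = fraud,
    0 = legitimate) deviates sharply from the historical baseline."""
    n = len(batch_labels)
    if n == 0 or not 0.0 < baseline_rate < 1.0:
        raise ValueError("need a non-empty batch and a baseline strictly between 0 and 1")
    observed = sum(batch_labels) / n
    # Standard error of a proportion, assuming the baseline rate holds.
    std_err = math.sqrt(baseline_rate * (1.0 - baseline_rate) / n)
    z = (observed - baseline_rate) / std_err
    return abs(z) > z_threshold
```

For example, with a 2% baseline, a batch of 1,000 records containing no fraud labels at all would be flagged for review, while a batch containing 20 would pass.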

This is not just a cybersecurity issue—it’s a regulatory compliance crisis. If AI-driven fraud detection tools can no longer be trusted, regulators will demand greater transparency, auditability, and explainability of AI-based security models.

Expect upcoming regulations to introduce:

- Mandatory AI security audits, ensuring that compliance models remain tamper-proof.

- Explainability standards, requiring firms to justify how their AI-driven fraud detection systems make decisions.

- Real-time AI threat monitoring, forcing institutions to adopt adaptive security models that can evolve as quickly as attackers innovate.

For financial firms, compliance is no longer just about meeting regulatory requirements—it’s about ensuring that AI-powered security tools remain resilient against AI-powered attacks.

The Rise of Invisible Cyber Threats—Why Traditional Security is Failing

Perhaps the most chilling prediction from the Menlo Security report is that 2025 will be the year of invisible cyberattacks. These AI-driven threats do not rely on traditional malware signatures. Instead, they manipulate browser sessions, cloud applications, and financial algorithms while leaving few of the traces that conventional security tools look for.

Unlike conventional attacks that depend on installing malware on a victim’s device, invisible AI-driven cyber threats work by:

- Hijacking active browser sessions, taking control of financial accounts without needing to steal credentials.

- Embedding malicious scripts within trusted cloud platforms, making phishing sites appear indistinguishable from real financial portals.

- Using AI-powered brute-force attacks to predict and crack passwords at speeds that undermine password-only authentication.
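Session hijacking in particular lends itself to a simple illustration. The Python sketch below binds a session to attributes recorded at login (here, the user agent and IP address) and flags any later request whose attributes no longer match. The function names `client_fingerprint` and `session_looks_hijacked` and the choice of attributes are assumptions for the example; production systems typically weigh more signals and tolerate legitimate changes such as a mobile user switching networks.

```python
import hashlib
import hmac


def client_fingerprint(user_agent: str, ip_address: str) -> str:
    """Hash the client attributes recorded when the session was created."""
    material = f"{user_agent}|{ip_address}".encode("utf-8")
    return hashlib.sha256(material).hexdigest()


def session_looks_hijacked(stored_fingerprint: str,
                           user_agent: str,
                           ip_address: str) -> bool:
    """Return True when the current request's attributes no longer match
    the fingerprint stored at login."""
    current = client_fingerprint(user_agent, ip_address)
    # compare_digest performs a constant-time comparison.
    return not hmac.compare_digest(stored_fingerprint, current)
```

A mismatch would not prove an attack on its own, but it is a reasonable trigger for step-up authentication before a high-value transaction proceeds.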

The financial sector’s reliance on legacy security tools is making it increasingly vulnerable. Signature-based detection methods—designed to recognize known threats—are proving ineffective against AI-driven attacks that continuously evolve.

To counter this, financial firms must rethink their entire cybersecurity strategy. Legacy security models that focus on firewall defense and endpoint monitoring need to be replaced with AI-powered security solutions capable of detecting and adapting to real-time threats.

2025: The Year Financial Compliance Becomes a Cybersecurity Imperative

The Menlo Security report makes one thing clear: financial cybersecurity and compliance are now inseparable. AI-driven cyber threats are not just about data breaches—they are about regulatory survival.

Financial institutions must:

- Move beyond endpoint security and prioritize browser protection, as the majority of AI-driven attacks now exploit browser vulnerabilities.

- Redefine compliance frameworks to address AI security risks, ensuring that fraud detection models are continuously audited for manipulation.

- Adopt AI-driven cybersecurity defenses, as AI-powered threats can only be countered by AI-powered protections.

Regulators are already scrutinizing financial institutions’ use of AI for fraud detection, and firms that fail to implement AI security measures today will face both regulatory consequences and increased cyber risk tomorrow.

Final Thought: Compliance is No Longer Just About Regulations—It’s About Survival

The findings from the Menlo Security 2025 report highlight a stark reality: AI is permanently reshaping the cyber threat landscape for financial institutions. Attackers are no longer hacking networks—they are hacking trust, AI compliance models, and the very systems designed to detect them.

For financial firms, compliance is no longer a check-the-box regulatory function—it is now a critical cybersecurity frontline. Institutions that fail to update their AI security strategies will not only risk regulatory fines—they will become prime targets for AI-powered cybercrime.

Studio AM: Helping Financial Firms Navigate AI-Driven Compliance Challenges

At Studio AM, we specialize in compliance-as-a-service (CaaS), helping financial institutions, fintech firms, and regtech providers navigate the evolving landscape of AI-driven cybersecurity risks.