AI Regulation in Finance: The Compliance Storm Has Arrived—Are You Ready?

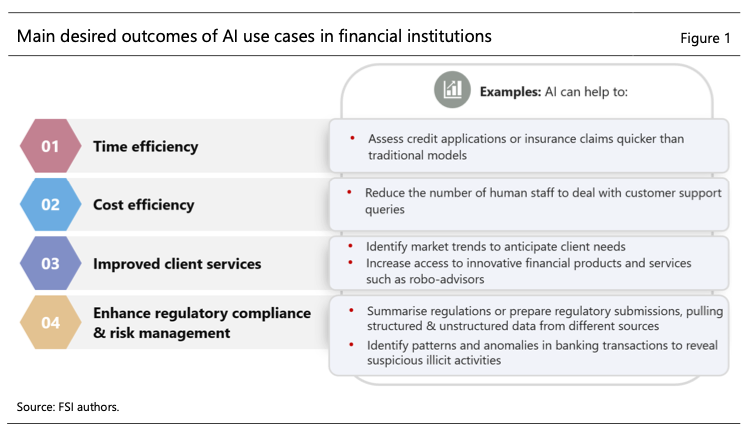

The financial industry is facing a regulatory shift unlike any before. Artificial intelligence (AI) has moved from being a tool for efficiency to a compliance minefield, and supervisors worldwide are rapidly stepping in to impose new governance requirements.

In December 2024, the Financial Stability Institute (FSI) of the Bank for International Settlements (BIS) published its analysis of AI regulation in finance (FSI Insights No. 63). Just three months later, many of its predictions are already materializing. The European Union AI Act classifies AI used for creditworthiness assessment, and for risk assessment and pricing in life and health insurance, as high-risk, which means financial institutions must be able to document, oversee, and explain AI-generated decisions. Meanwhile, the United States, UK, Singapore, and China are moving forward with their own AI governance frameworks, each focusing on accountability, transparency, and risk mitigation.

The FSI report makes one thing clear: AI regulation is no longer broad and theoretical—it is becoming specific and enforceable. Financial institutions that fail to adapt will soon find themselves legally exposed, operationally constrained, and reputationally damaged.

📖 Ref: Financial Stability Institute (FSI) of the Bank for International Settlements (BIS) (2024). Regulating AI in the financial sector: recent developments and main challenges. FSI Insights No. 63.

The Regulatory Landscape Is Changing—Fast

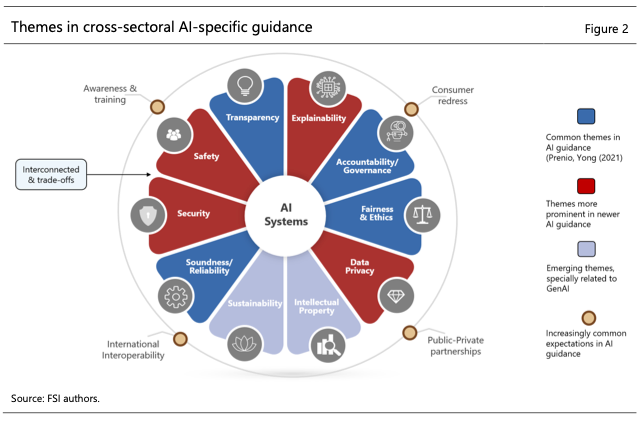

For years, financial regulators took a technology-neutral approach, applying existing governance and risk management frameworks to AI without creating AI-specific rules. That approach is ending. The FSI report signals a clear shift: AI is now seen as a systemic risk factor, requiring direct regulatory intervention.

This is particularly evident in credit underwriting and insurance risk assessment, where AI-driven decisions can have a direct impact on consumers, financial stability, and market conditions. Regulators are no longer comfortable with AI models operating as black boxes—they want financial firms to provide clear, auditable justifications for AI-driven decisions.

- The EU AI Act is already setting a precedent, requiring high-risk AI systems in finance to meet transparency, fairness, and robustness standards. Financial institutions using AI for risk assessment will need to monitor their models on an ongoing basis, so that AI-driven decisions remain compliant and non-discriminatory over time.

- In the United States, the Financial Stability Oversight Council (FSOC) identified the use of AI in financial services as an emerging vulnerability in its 2023 annual report, a signal that supervisors see AI failures as a potential source of market-wide stress.

- The UK’s Financial Conduct Authority (FCA) is taking a rules-light but oversight-heavy approach, requiring firms to self-regulate under constant supervisory scrutiny.

- Singapore and China are moving toward increased AI oversight, with China already enforcing AI content regulations and the Monetary Authority of Singapore (MAS) expected to expand its AI compliance requirements.

With regulators across jurisdictions aligning on AI oversight, financial institutions should assume that compliance expectations will only become stricter and more complex in the near future.

AI’s Hidden Risks: What Regulators Are Watching Closely

Financial institutions have spent years refining AI models for efficiency, fraud detection, and credit risk assessment, but regulators are now focusing on hidden systemic risks that many compliance teams have yet to fully address. The FSI report highlights two major concerns that financial institutions must act on immediately.

AI-Induced Herding Behavior Could Destabilize Financial Markets

Financial institutions are increasingly training their AI models on similar datasets and deploying them in similar ways. This synchronization of AI-driven decision-making creates a procyclical effect, where AI models:

- Withdraw credit simultaneously during economic downturns, leading to credit freezes and amplifying financial instability.

- Over-lend during economic booms, inflating asset bubbles and increasing systemic risk.

If left unchecked, AI-driven herding could exacerbate financial cycles, making downturns more severe and bubbles more extreme. The FSI report suggests that regulators may soon introduce AI-specific stress testing requirements, forcing financial institutions to demonstrate that their AI models do not contribute to systemic risk under extreme market conditions.
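To make the herding concern concrete, here is a minimal Python sketch of the kind of check an AI-specific stress test might include. It is an illustration under stated assumptions, not a method from the FSI report: the cutoff values, the 0 to 100 stress scale, and the function names are all hypothetical. Each lender's model is reduced to a single cutoff at which it withdraws credit, and the sketch measures how abruptly credit disappears as stress rises.

```python
def fraction_cutting_credit(cutoffs, stress_level):
    """Fraction of lenders whose (hypothetical) model withdraws credit at this stress level."""
    cutting = [stress_level >= cutoff for cutoff in cutoffs]
    return sum(cutting) / len(cutoffs)


def worst_step_change(cutoffs, stress_path):
    """Largest single-step jump in the fraction of lenders cutting credit along the path."""
    fractions = [fraction_cutting_credit(cutoffs, s) for s in stress_path]
    return max(later - earlier for earlier, later in zip(fractions, fractions[1:]))


# Hypothetical stress scenario: stress rises from 0 to 100 in steps of 10.
stress_path = list(range(0, 101, 10))

# Assumption: models trained on similar data end up with near-identical cutoffs.
similar_models = [50, 50, 50, 50, 50]
# Assumption: independently built models react at different stress levels.
diverse_models = [20, 40, 50, 70, 90]

herded = worst_step_change(similar_models, stress_path)
diversified = worst_step_change(diverse_models, stress_path)

print(f"Similar models: worst one-step credit withdrawal = {herded:.0%}")
print(f"Diverse models: worst one-step credit withdrawal = {diversified:.0%}")
```

With identical cutoffs, every lender withdraws credit in the same step; with varied cutoffs, the withdrawal is spread across the scenario. A real stress test would use the institution's actual models and supervisor-defined scenarios rather than fixed cutoffs.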

Third-Party AI Dependencies Are Creating Concentration Risks

Many financial institutions do not build their own AI models. Instead, they rely on third-party AI service providers, including big tech firms and fintech vendors. While this approach accelerates AI adoption, it creates a major concentration risk—one that regulators are now scrutinizing.

- If a critical AI provider suffers an outage or cyberattack, multiple financial institutions could experience simultaneous AI model failures, disrupting everything from fraud detection to credit approvals.

- Even as regulators move toward direct oversight of AI service providers, financial institutions will remain fully accountable for the compliance of the AI solutions they outsource.

- The FSI report predicts that future regulations will require financial institutions to conduct AI-specific audits on their third-party providers, ensuring that external AI models meet the same transparency and risk standards as in-house systems.

With AI becoming more interconnected, regulators will no longer accept a passive approach to third-party risk management. Financial institutions must strengthen AI vendor oversight now, before regulators impose stricter controls.
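One simple way to start quantifying vendor concentration is to inventory which provider sits behind each AI use case and compute a Herfindahl-Hirschman index (HHI) over the resulting shares. The sketch below is an assumption-laden illustration, not a metric prescribed by the FSI report: the use-case names, vendor labels, and function name are hypothetical, and HHI is borrowed here from market-concentration analysis.

```python
from collections import Counter


def vendor_concentration(model_to_vendor):
    """Return each vendor's share of AI use cases and the HHI (sum of squared shares)."""
    counts = Counter(model_to_vendor.values())
    total = sum(counts.values())
    shares = {vendor: n / total for vendor, n in counts.items()}
    hhi = sum(share ** 2 for share in shares.values())
    return shares, hhi


# Hypothetical inventory: AI use case -> provider behind it.
inventory = {
    "fraud_detection": "vendor_a",
    "credit_scoring": "vendor_a",
    "chat_assistant": "vendor_a",
    "aml_screening": "in_house",
}

shares, hhi = vendor_concentration(inventory)
print(shares)  # share of use cases per provider
print(hhi)     # 1.0 means a single provider; lower values mean more diversification
```

An HHI close to 1.0 indicates that an outage at one provider would hit most AI use cases at once. Weighting use cases by business criticality, rather than counting them equally, would be a natural refinement.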

Where AI Regulation Is Headed: The Next Compliance Challenges

The FSI report provides an early roadmap for where AI regulation is going, but financial institutions should prepare for even more disruptive compliance trends in the coming years.

- AI risk management will be mandated, not optional. Regulators will require standardized risk controls, explainability audits, and real-time AI model validation.

- Third-party AI vendors will face direct regulatory scrutiny. Financial institutions will need to conduct due diligence on AI providers, ensuring external models comply with emerging AI governance standards.

- Explainability requirements will intensify. AI models used for lending, fraud detection, and insurance underwriting will need to provide clear, auditable justifications for their decisions.

- AI stress testing will become part of regulatory oversight. Financial institutions will need to demonstrate how their AI models behave under extreme market conditions, ensuring that AI-driven decisions do not amplify financial instability.

- AI-specific compliance frameworks will emerge. Similar to Basel III for banking risk, regulators may introduce AI model certification requirements, regulatory reporting obligations, and ongoing supervisory reviews.

Financial institutions that fail to anticipate these regulatory developments risk being caught off guard—and penalized—for non-compliance.
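As an illustration of what a "clear, auditable justification" can look like in practice, the sketch below derives reason codes from a simple linear credit score by ranking the features that pulled an applicant's score down relative to a baseline. This is a minimal example under stated assumptions: the feature names, weights, and baseline are hypothetical, and more complex models generally need dedicated attribution techniques rather than this direct calculation.

```python
def reason_codes(weights, applicant, baseline, top_n=2):
    """Return the top_n features that lowered a linear score most versus the baseline."""
    contributions = {
        name: weights[name] * (applicant[name] - baseline[name])
        for name in weights
    }
    # Keep only features that reduced the score, most damaging first.
    adverse = sorted(
        (item for item in contributions.items() if item[1] < 0),
        key=lambda item: item[1],
    )
    return [name for name, _ in adverse[:top_n]]


# Hypothetical scorecard: positive weights raise the score, negative weights lower it.
weights = {"income_k": 0.5, "debt_ratio_pct": -2.0, "missed_payments": -15.0}
baseline = {"income_k": 60, "debt_ratio_pct": 30, "missed_payments": 0}
applicant = {"income_k": 50, "debt_ratio_pct": 45, "missed_payments": 1}

codes = reason_codes(weights, applicant, baseline)
print(codes)
```

Logging the reason codes alongside each decision gives compliance teams an audit trail they can show to a supervisor or a customer.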

Why AI Compliance Is No Longer Just a Legal Obligation—It’s a Competitive Advantage

The FSI report signals a new era in financial regulation—one where AI governance is no longer an afterthought but a core compliance requirement. Financial institutions that fail to align their AI strategies with emerging regulations will face significant legal, operational, and reputational risks. However, those that proactively integrate AI governance, strengthen third-party oversight, and embrace transparency will gain a competitive edge in an AI-driven financial landscape.

At Studio AM, we provide AI compliance solutions tailored for financial institutions, fintechs, and regtechs. Our expertise spans AI risk assessments, explainability audits, third-party AI governance, and regulatory reporting frameworks, ensuring that your AI-driven operations remain compliant, secure, and future-proof.

🚀 The AI compliance storm has arrived—are you prepared? Contact us today to build an AI governance strategy that stands the test of regulation.