AI in the Front Office: Navigating the Model Risk and Operational Resilience Blind Spots

November 12, 2025

Read the full original report here:

Hong Kong Private Wealth Management Report 2025

Introduction

The 2025 Hong Kong Private Wealth Management Report sends an unequivocal message: artificial intelligence (AI) is no longer a fringe concept but the top investment theme for clients[1]. The report highlights a surge in the adoption of AI-driven tools, from voice-to-text solutions to automated client onboarding and advanced data analytics. For private wealth management (PWM) firms in Hong Kong and beyond, the race is on to integrate AI into the front office to enhance client engagement, personalize services, and drive operational efficiency. However, this gold rush towards AI-powered innovation conceals a complex and perilous landscape of hidden risks. For the Chief Compliance Officer (CCO) and Chief Risk Officer (CRO), the question is not whether but how to manage the intertwined model risk and operational resilience challenges that accompany these powerful new technologies.

This analysis moves beyond the hype to provide a clear-eyed assessment of the compliance and risk management imperatives for PWM firms deploying AI in client-facing functions. We will dissect the specific model and operational risks that arise, conduct a cross-jurisdictional comparison of regulatory expectations in the United Kingdom, the United States, Japan, and Hong Kong, and conclude with a set of actionable recommendations for building a robust AI governance framework. The central thesis is this: without a proactive and deeply integrated approach to risk management, the promised gains of front-office AI can quickly be eclipsed by regulatory sanctions, reputational damage, and catastrophic operational failures.

The Illusion of Seamlessness: Unpacking AI’s Front-Office Risks

The allure of AI in the front office lies in its promise of a seamless, hyper-personalized client experience. However, beneath this veneer of efficiency lies a host of risks that can have a profound impact on a firm’s compliance and operational integrity.

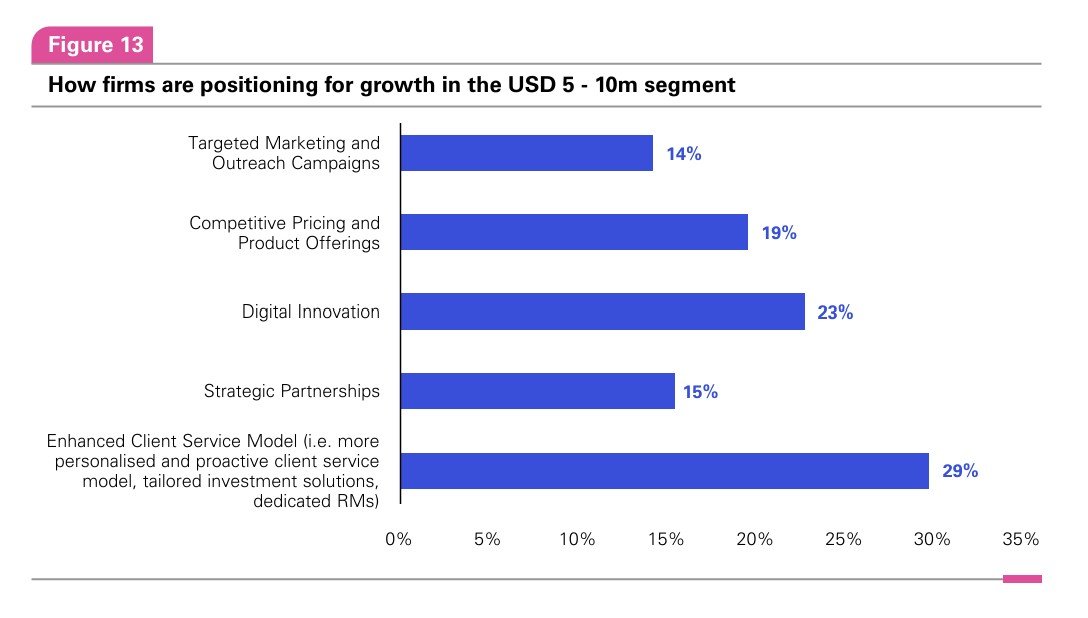

The Hong Kong PWM report notes that 29% of firms are building more personalized engagement models for the next generation of clients, while 23% are investing in digital innovation[1]. These initiatives, while commercially sensible, introduce new vectors of risk that traditional compliance frameworks are often ill-equipped to handle.

Source: KPMG

Model risk, the potential for adverse consequences from decisions based on incorrect or misused model outputs, is magnified in the context of AI. Unlike traditional statistical models, AI models, particularly those based on machine learning, can be opaque, complex, and dynamic. When deployed in the front office for tasks such as robo-advisory, automated suitability assessments, or even sentiment analysis of client communications, the potential for harm is significant.

Algorithmic bias, where a model systematically produces unfair outcomes for certain demographics, can lead to violations of fair lending and anti-discrimination laws. Data drift, where the statistical properties of the data a model sees in production diverge over time from those of the data it was trained on, can lead to a silent degradation in model performance, resulting in unsuitable recommendations or flawed risk assessments. The “black box” nature of many advanced AI models poses a significant challenge to the core regulatory expectation of explainability, making it difficult for firms to justify their decisions to clients and regulators.
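To make the data drift point concrete, the sketch below computes a Population Stability Index (PSI) for a single numeric feature, comparing a training-time sample with a live sample. PSI is one widely used drift statistic, not something the report or the regulators prescribe. The function name, the equal-width binning, and the `eps` floor are illustrative assumptions.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a training-time sample and a live sample of one feature.

    Bins are equal-width over the training range (an assumption; quantile
    bins are also common). `eps` floors empty bins so the log is defined.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant feature

    def bin_shares(values: Sequence[float]) -> list[float]:
        counts = [0] * n_bins
        for v in values:
            # Clamp so live values outside the training range land in the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    e = bin_shares(expected)
    a = bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    training = [i / 100 for i in range(100)]
    live = [x + 0.5 for x in training]  # hypothetical shifted population
    print(f"PSI = {population_stability_index(training, live):.2f}")
```

A common rule of thumb treats a PSI below about 0.1 as stable and above about 0.25 as a material shift that warrants investigation. These thresholds are industry conventions, so a firm would need to calibrate and document its own.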

Beyond the model itself lies the often-underestimated challenge of operational resilience. The increasing reliance on AI, especially from third-party vendors, creates new dependencies and points of failure. A recent study found that banking organizations with higher AI investments are exposed to more operational risk[2].

An outage or malfunction of a critical AI system, such as a client onboarding or transaction monitoring tool, can bring business operations to a halt, leading to significant financial losses and client harm. The integration of AI from multiple vendors can create a complex and fragmented technology stack, making it difficult to identify the root cause of failures and coordinate a response. Furthermore, the very nature of AI, with its ability to learn and adapt, can lead to emergent risks that were not anticipated during the initial design and testing phases.
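One common engineering pattern for containing a vendor outage is a circuit breaker with a documented fallback path, such as routing the case to a manual queue. The sketch below is a minimal illustration under stated assumptions: the class name, the thresholds, and the idea that the fallback is a manual process are hypothetical and do not come from any cited guidance.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Stops calling a failing dependency until a cool-down has elapsed."""

    def __init__(
        self,
        max_failures: int = 3,
        reset_after_s: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.clock = clock  # injectable so the behaviour can be tested
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, primary: Callable[[], str], fallback: Callable[[], str]) -> str:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                return fallback()  # circuit open: skip the vendor entirely
            # Cool-down over: allow one trial call; a single failure reopens.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = primary()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0
        return result
```

In use, `primary` would wrap the call to the third-party AI service and `fallback` would return a reference to the manual process, so that an outage degrades the service instead of halting it. Each trip of the breaker is also a natural event to log for root-cause analysis across vendors.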

A 2025 report by Dun & Bradstreet revealed that more than half of firms admitted to failed AI projects, and 64% lacked confidence in their data for decision-making and risk management[3], underscoring the gap between ambition and execution.

A Global Regulatory Mosaic: Cross-Jurisdictional Perspectives on AI Risk

As financial institutions globally grapple with the risks of AI, regulators in key financial hubs are developing their own approaches to supervision. While a harmonized global framework remains a distant prospect, a review of the current thinking in the UK, US, and Japan reveals a set of common principles and emerging expectations that can guide PWM firms in Hong Kong.

| Jurisdiction | Key Regulatory Body | Core Approach | Stance on AI Definition | Key Documents |
|---|---|---|---|---|
| United Kingdom | FCA / PRA | Principles-based, technology-neutral, outcomes-focused | Against a specific definition | FS2/23; SS1/23 (Model Risk) |
| United States | OCC / Federal Reserve | Risk-based, applying existing frameworks | Technology-neutral, no specific definition | SR 11-7 (Model Risk) |
| Japan | FSA | Supportive of innovation, dialogue-based | Against a specific definition, recognizes unique AI traits | AI Discussion Paper (2025) |
| Hong Kong | HKMA / SFC | Risk-based, principles-based, consumer protection focus | No specific definition, but focus on GenAI traits | HKMA & SFC Circulars (2024) |

The United Kingdom: Principles and Proportionality

The UK’s Financial Conduct Authority (FCA) and Prudential Regulation Authority (PRA) have adopted a technology-neutral and principles-based approach. In their feedback statement on AI and machine learning (FS2/23), the regulators noted that most respondents did not favor a specific regulatory definition of AI, fearing it would quickly become outdated[4].

Instead, the focus is on outcomes and ensuring that firms manage the risks associated with AI within existing regulatory frameworks.

The PRA’s Supervisory Statement on model risk management (SS1/23) is a case in point.

While not AI-specific, its principles (covering model identification and risk classification, governance, model development, implementation and use, and independent model validation) are seen as sufficiently robust to cover AI models. The regulators have also emphasized the importance of the Senior Managers and Certification Regime (SM&CR) in ensuring clear accountability for AI-related risks.

The United States: Extending a Mature Framework

The US has the most established framework for model risk management: the Supervisory Guidance on Model Risk Management, issued jointly in 2011 by the Federal Reserve (as SR 11-7) and the Office of the Comptroller of the Currency (OCC) (as Bulletin 2011-12)[5]. This guidance, which predates the current AI boom, provides a comprehensive framework for model validation, governance, and ongoing monitoring that is being applied to AI and machine learning models.

The OCC has been particularly active in clarifying its expectations for AI, emphasizing the need for banks to manage the full lifecycle of models and to ensure fairness, transparency, and explainability.

While the core principles of SR 11-7 remain relevant, there is a growing recognition, both from industry and regulators, that the unique characteristics of AI may require further clarification, particularly around issues of bias, interpretability, and the use of alternative data.

Japan: Balancing Innovation with Prudence

Japan’s Financial Services Agency (FSA) has taken a notably forward-looking stance. In its 2025 AI Discussion Paper, the FSA not only acknowledged the risks of AI but also explicitly highlighted the “risk of inaction”—the potential for a long-term decline in the quality of financial services due to technological stagnation[6].

The FSA is promoting a dialogue-based approach, working with financial institutions through forums to foster the “sound utilization” of AI.

While also favoring a technology-neutral approach, the FSA has indicated that it is prepared to review and revise laws and guidelines where the unique characteristics of AI warrant special consideration. This approach reflects a desire to balance the promotion of innovation with the need for robust risk management, a perspective that is particularly relevant for a dynamic financial center like Hong Kong.

Hong Kong: A Pragmatic Embrace of “Human in the Loop”

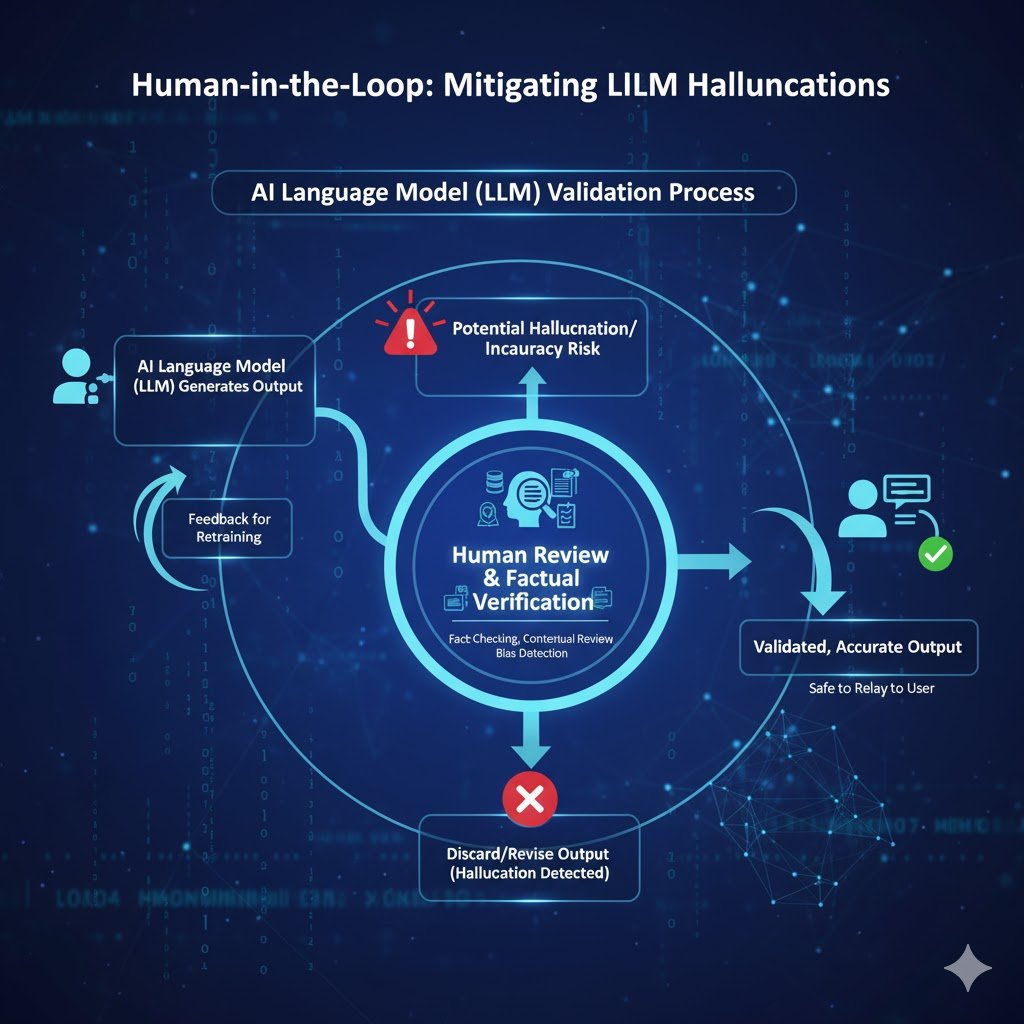

As a global financial hub directly grappling with the opportunities and risks of AI, Hong Kong’s regulators have adopted a notably pragmatic and principles-based approach. Rather than issuing a single, overarching AI law, the Hong Kong Monetary Authority (HKMA) and the Securities and Futures Commission (SFC) have extended existing regulatory frameworks, issuing specific guidance on Generative AI that emphasizes consumer protection and senior management accountability. This approach is particularly noteworthy for its explicit and mandatory inclusion of a “human-in-the-loop” mechanism for high-risk applications.

In its August 2024 circular on the use of Generative AI, the HKMA mandated that for customer-facing applications, firms must adopt a “human-in-the-loop” approach during the early stages of deployment[7]. This requires a human to retain control in the decision-making process to ensure that model-generated outputs are accurate and not misleading.

Source: AI-generated

Similarly, the SFC’s November 2024 circular on AI language models identifies the provision of investment recommendations and advice as “high-risk use cases” and explicitly requires a “human in the loop to address hallucination risk and review the AI LM’s output for factual accuracy before relaying it to the user”[8]. This focus on human oversight is a direct response to the specific risks posed by generative AI, such as hallucination and bias.

It reflects a regulatory philosophy that, while supportive of innovation, places the ultimate responsibility for fairness, accuracy, and suitability squarely on the shoulders of the firm and its senior management.

For PWM firms in Hong Kong, this means that simply deploying a third-party AI tool is not enough; they must build and document a robust supervisory and validation process that includes meaningful human intervention at critical points in the client journey.
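As a rough illustration of what a documented human-in-the-loop control might look like in software, the sketch below blocks the release of any high-risk AI-generated draft until a named reviewer has approved it, and records who reviewed it and when. All names here (`Draft`, `review`, `release`, the `high_risk` flag) are hypothetical. Neither circular prescribes an implementation, and which outputs count as high-risk is a policy decision for the firm.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum
from typing import Optional


class ReviewStatus(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Draft:
    """An AI-generated message awaiting release to a client."""
    client_id: str
    text: str
    high_risk: bool  # e.g. contains investment advice (firm-defined policy)
    status: ReviewStatus = ReviewStatus.PENDING
    reviewer: Optional[str] = None
    reviewed_at: Optional[datetime] = None


def review(draft: Draft, reviewer: str, approve: bool) -> Draft:
    """Record the human decision together with an audit trail."""
    draft.status = ReviewStatus.APPROVED if approve else ReviewStatus.REJECTED
    draft.reviewer = reviewer
    draft.reviewed_at = datetime.now(timezone.utc)
    return draft


def release(draft: Draft) -> str:
    """Return the text for delivery, refusing unreviewed high-risk drafts."""
    if draft.high_risk and draft.status is not ReviewStatus.APPROVED:
        raise PermissionError(
            "high-risk output requires human approval before release"
        )
    return draft.text
```

Setting `high_risk=True` for every client-facing output would be the conservative default during early deployment. The point of the design is that the approval check sits in the release path itself, so the control cannot be skipped by accident, and the reviewer and timestamp fields provide evidence of supervision.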

The Path Forward: Actionable Recommendations for Risk Leaders

Navigating the complex landscape of AI risk requires a proactive and multi-faceted approach. For CCOs and CROs at PWM firms, the starting point is to recognize that AI is not simply an IT issue but a strategic challenge that requires a firm-wide response.

The following actionable recommendations can serve as a roadmap for building a robust AI governance and risk management framework:

Conclusion

The integration of AI into the front office of private wealth management is an irreversible trend, one that promises significant benefits for firms and their clients.

However, as the 2025 Hong Kong PWM Report makes clear, the industry is at a critical juncture.

The rush to innovate must be tempered by a clear-eyed and proactive approach to risk management.

By learning from the emerging regulatory consensus in leading financial centers and by taking concrete steps to enhance their governance, model risk management, and operational resilience frameworks, PWM firms can navigate the blind spots of AI and realize its full potential, safely and responsibly.

References

[1] KPMG & Private Wealth Management Association (2025) - Hong Kong PWM Report. https://kpmg.com/cn/en/home/insights/2025/11/hong-kong-private-wealth-management-report-2025.html

[2] McLemore, P. (2025) - Boston Fed research on AI and operational losses. https://www.bostonfed.org/-/media/Documents/events/2025/stress-testing-research-conference/McLemore_AIandOpLosses.pdf

[3] Dun & Bradstreet (2025) - AI resilience trends. https://fintech.global/2025/11/10/dun-bradstreet-reveals-2025-resilience-trends/

[4] Bank of England & FCA (2023) - FS2/23 on AI and Machine Learning. https://www.bankofengland.co.uk/prudential-regulation/publication/2023/october/artificial-intelligence-and-machine-learning

[5] Federal Reserve & OCC (2011) - SR 11-7 Model Risk Management. https://www.federalreserve.gov/supervisionreg/srletters/sr1107.htm

[6] Japan FSA (2025) - AI Discussion Paper. https://www.fsa.go.jp/en/news/2025/20250304/aidp.html

[7] HKMA (2024) - GenAI Consumer Protection Circular (Ref: B1/15C, B9/67C). https://brdr.hkma.gov.hk/eng/doc-ldg/docId/20241107-1-EN

[8] SFC Hong Kong (2024) - GenAI Language Models Circular (Ref: 24EC55). https://apps.sfc.hk/edistributionWeb/gateway/EN/circular/intermediaries/supervision/doc?refNo=24EC55